You’ve seen them.

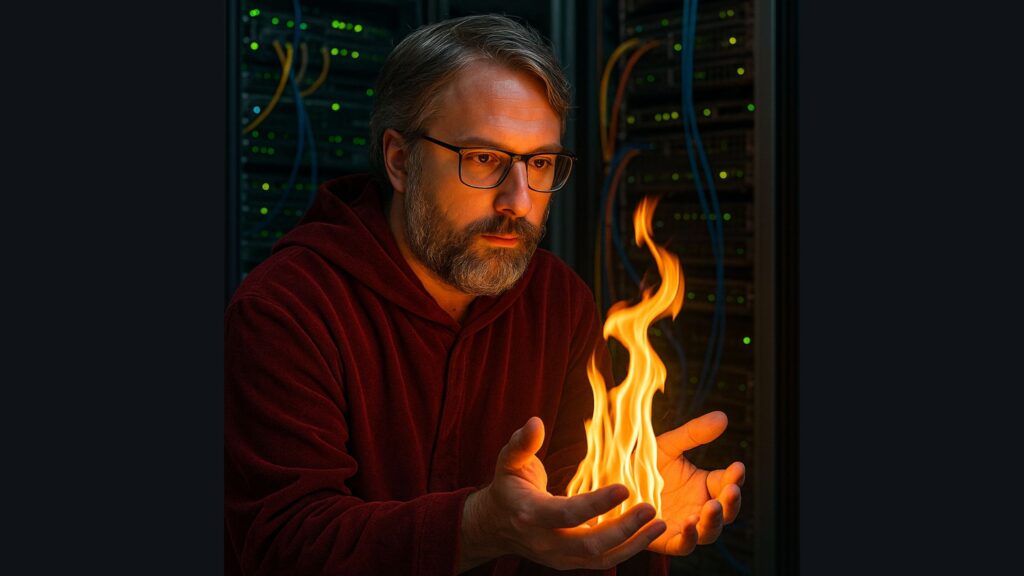

The self-declared AI builders who’ve discovered ChatGPT’s code generation and now think they’ve unlocked developer superpowers.

They copy, paste, tweak a few lines, and ship an app before your leftovers are warm in the microwave.

No Git hygiene. No input validation. No tests. And definitely no clue.

But hey—it compiles, so it must be fine, right?

Wrong.

This isn’t just annoying. It’s dangerous. For users. For companies. For the real engineers who end up cleaning up the rubble after the vibe wears off and the thing explodes in prod.

Let’s Talk About Where That Code Comes From

Vibe coders love to say, “The AI wrote it.”

But AI doesn’t create in a vacuum. It’s trained on other people’s work.

Some of that code came from Stack Overflow.

Some from public GitHub repos whose licenses you’re probably violating.

Some from places you really don’t want to be quoting in your codebase.

But vibe coders don’t stop to check. They don’t ask:

- Is this code licensed for commercial use?

- Was it secure when it was first written?

- Has it been updated since Heartbleed? And hey, never mind: what is Heartbleed?

- Does it actually solve the right problem, or does it just look like it does?

Because vibe coding isn’t about understanding. It’s about getting it done fast.

And if that means hardcoding credentials or skipping auth because “it’s just a prototype,” so be it.
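For contrast, not hardcoding a credential usually takes only a few lines. Here’s a minimal Python sketch, assuming your deployment injects secrets as environment variables; the helper name and the variable name in the usage line are hypothetical.

```python
import os


def get_required_secret(name: str) -> str:
    """Read a secret from the environment instead of hardcoding it in source.

    Raises RuntimeError if the variable is missing or empty, so a
    misconfigured deployment fails at startup instead of running
    without credentials.
    """
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required secret: {name}")
    return value


# Anti-pattern: a credential committed to the repo forever.
# DB_PASSWORD = "hunter2"

# Hypothetical usage: the variable name is an example, not a convention.
# db_password = get_required_secret("APP_DB_PASSWORD")
```

A secrets manager is often the better long-term home, but even this version keeps the password out of your Git history.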

When Developers Object, They’re Not Gatekeeping—They’re Protecting You

You know that annoying developer who keeps asking where the secrets are stored, or why your REST endpoints don’t require auth? The one who insists you shouldn’t trust user input, and who dares to bring up concepts like “audit logs” and “input sanitization”?

That person isn’t trying to slow you down.

They’re trying to keep your company off the front page.

Security isn’t overhead. It’s part of responsible engineering.
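And “don’t trust user input” is rarely as much work as it sounds. Here’s a minimal Python sketch of allowlist validation; the username rule and the quantity limit are hypothetical examples chosen for illustration, not a standard.

```python
import re

# Hypothetical rule: 3-32 ASCII letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")


def validate_username(raw: str) -> str:
    """Return the username only if the whole string matches the allowlist."""
    if not isinstance(raw, str) or USERNAME_PATTERN.fullmatch(raw) is None:
        raise ValueError("invalid username")
    return raw


def validate_quantity(raw: str, max_qty: int = 100) -> int:
    """Parse a quantity from untrusted input and enforce a sane range."""
    try:
        qty = int(raw)
    except (TypeError, ValueError):
        raise ValueError("quantity must be an integer") from None
    if not 1 <= qty <= max_qty:
        raise ValueError(f"quantity must be between 1 and {max_qty}")
    return qty
```

Rejecting anything that doesn’t match a known-good shape is generally safer than trying to strip out known-bad characters after the fact.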

And when you skip that part because ChatGPT gave you a neat code snippet, you’re not innovating.

You’re creating work for the devs who’ll have to patch the leaks after you’ve been fired over the data breach.

Lunch Break Code Has a Cost

You can ship an MVP in a lunch break.

You just won’t be around to fix it when it starts charging people twice, leaking credentials, or exposing your admin panel to the internet.

Worse, you won’t understand what happened.

Because you didn’t write the logic—you just vibed it into existence.

And let’s be clear:

The judge, the auditor, or the regulator isn’t going to care that ChatGPT said it was fine.

They’ll ask who deployed it. Who reviewed it. Who owned the risk.

And if that’s you?

Good luck.

A Note to Real Developers: Start Charging Triple

If you’re the engineer who gets called in to fix the vibe-coded monstrosity after it hits prod, triple your rate.

You’re not debugging code. You’re reverse-engineering a hallucination.

You’re tracing variables through copy-pasted fragments with no context.

You’re deciphering architecture decisions made by someone who didn’t know what CORS was.

And you’re doing all of this under pressure, because “the app worked yesterday.”

Charge accordingly.

Final Thought: AI Is an Accelerator, Not a Shield

AI is a fantastic tool. It can scaffold code, suggest patterns, and even teach you concepts.

But if you don’t know what you’re pasting or why it works, you’re not coding. You’re gambling.

And in regulated industries or high-stakes systems, guess what?

That gamble doesn’t just land in prod. It lands in court.

So before you “just build it,” ask yourself:

Would I bet my company on this?

Would I bet my job?

Because if the answer is no, then maybe it’s time to stop coding on vibes.